Detection of AI-generated texts with the AI detector

PlagAware features an AI detector that detects typical features of texts created by generative artificial intelligence ("GenAI") such as ChatGPT, Copilot, Gemini or LLaMA. We explain how this works and provide information on how to correctly interpret the results.

The most important facts at a glance

- PlagAware's AI detector recognizes texts generated by a generative AI such as ChatGPT based on stereotypical language.

- During AI detection, no data is sent to ChatGPT or other external service providers - all data remains with PlagAware.

- Natural texts can also contain stylistic elements and formulations that frequently occur in AI-generated texts. An accumulation of stereotypical AI formulations is therefore not reliable proof that a text was created by an AI.

How does PlagAware detect AI-generated texts?

Texts created or rewritten by artificial intelligences (AIs) such as ChatGPT, Copilot, LLaMA or Gemini are based on large language models (LLMs). LLMs are statistical models that predict the most probable sequence of words in a given context and can thus generate texts.

However, the prediction of word sequences based on statistical probabilities leads to certain word combinations being used more frequently than would usually be the case in "natural" - human-generated - texts.

PlagAware's AI detector identifies these stereotypical frequencies of AI-typical expressions and displays them as colored highlights in the text. Both the relative frequency of conspicuous language and the degree of conspicuousness of an individual phrase - its relevance - are evaluated. The relevance indicates how much more frequently a phrase occurs in AI-generated texts compared to natural texts.
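
The idea of relevance can be illustrated with a minimal sketch. It assumes that relevance is the ratio of a phrase's relative frequency in AI-generated texts to its relative frequency in natural texts. PlagAware's actual formula is not published here, so the function name `relevance`, the `smoothing` parameter and all counts are hypothetical.

```python
def relevance(ai_count, ai_total, natural_count, natural_total, smoothing=1.0):
    """Ratio of a phrase's relative frequency in AI texts vs. natural texts.

    Values above 1 mean the phrase is more common in AI-generated texts.
    Additive smoothing (an assumption) avoids division by zero for phrases
    that never occur in one of the two corpora.
    """
    ai_rate = (ai_count + smoothing) / (ai_total + smoothing)
    natural_rate = (natural_count + smoothing) / (natural_total + smoothing)
    return ai_rate / natural_rate


# Hypothetical counts: 30 occurrences per 1000 AI phrases,
# 10 occurrences per 1000 natural phrases.
print(relevance(30, 1000, 10, 1000, smoothing=0))
```

With these invented counts the phrase occurs about three times as often in AI-generated texts as in natural texts.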

Display of the AI detector and language analysis in the results report

Click on the AI / style analysis button to switch from the plagiarism check view to the AI detector and style analysis view. This will display various metrics for analyzing the text style and AI typicality (see below) instead of the sources found.

Elements of the AI and style analysis view

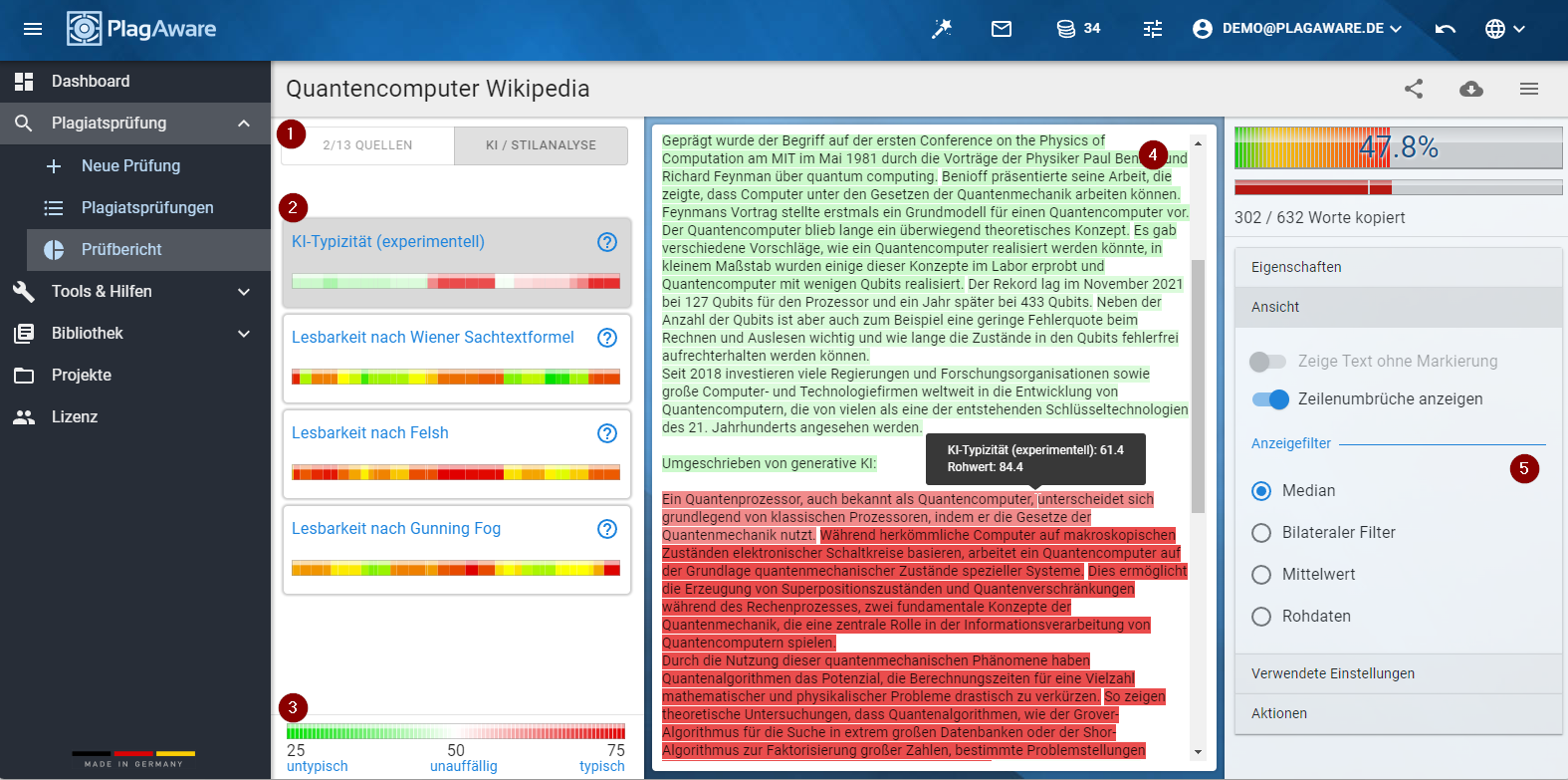

In the AI / style analysis view, different metrics for text analysis and the corresponding check text are displayed instead of the sources found.

The additional elements in the AI detector view

In contrast to the report on the results of the plagiarism check, the AI detector view contains the following elements:

- Switch between the views of the plagiarism check and the AI / style analysis.

- Selection of the text metric to be displayed in the main window of the view.

- Color legend for interpreting the selected metric.

- Test text with highlighting corresponding to the selected metric. If you move the mouse over the text, the (filtered) metric of the respective sentence is displayed together with the raw value determined.

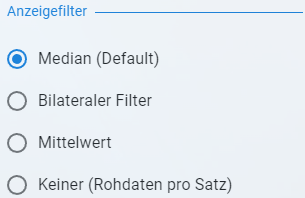

- Selection of the display filter that is used for the display in the main window.

The calculation of AI typicality

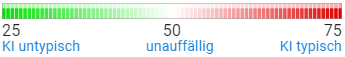

AI typicality is a measure of the frequency of AI-stereotypical expressions and the relevance of these formulations in a text. It is given as a percentage between 0% and 100% and is highlighted in the text in green or red.

The percentages and colors are to be interpreted as follows:

- Green - AI typicality 0% - 50%: Text passages with a green background show an accumulation of formulations that are more typical of natural (i.e. non-AI-generated) texts.

- Neutral - AI typicality around 50%: Text passages without color highlighting contain formulations that occur equally often in natural and AI-generated texts.

- Red - AI typicality 50% - 100%: Text passages highlighted in red show an accumulation of formulations that are more typical of AI-generated texts.

The stronger the color background, the greater the accumulation of typical formulations and the more typical the formulations used in the marked text. For AI typicality, this means a strong deviation from the neutral mean value of 50%.
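
The relationship between percentage, color and color strength can be sketched as follows. This is only an illustration: the linear intensity scale, the treatment of exactly 50% as neutral and the function name `typicality_color` are assumptions, not PlagAware's actual rendering logic.

```python
def typicality_color(typicality_percent):
    """Map an AI typicality (0-100 %) to a highlight color and intensity.

    Intensity is 0.0 at the neutral value of 50 % and grows linearly
    (an assumption) to 1.0 at 0 % or 100 %.
    """
    if not 0 <= typicality_percent <= 100:
        raise ValueError("AI typicality must be between 0 and 100")
    intensity = abs(typicality_percent - 50) / 50
    if typicality_percent < 50:
        color = "green"    # more typical of natural texts
    elif typicality_percent > 50:
        color = "red"      # more typical of AI-generated texts
    else:
        color = "neutral"  # no highlighting
    return color, intensity


for value in (10, 50, 75):
    print(value, typicality_color(value))
```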

Like all other metrics, AI typicality is calculated for each sentence. As a rule, these values vary considerably around a mean value within a section, so that averaging over several sentences within a section is easier to interpret and leads to clearer results. This averaging can be found in the Display filter area in the view options on the right-hand side of the main window.

How reliable is the AI detector or AI typicality?

The AI Detector recognizes statistically significant clusters of formulations that occur through the use of AI tools.

It should be noted that these clusters do not necessarily occur when a text is created by an AI. In particular, the "creativity" of an AI, as well as the style used, can be significantly influenced by the selected AI model and by the instructions given to the AI, the so-called "prompt". This can lead to a text being incorrectly assessed as "natural text", even though it was actually created by an AI (first-order error).

In addition, LLMs work with statistical word probabilities determined from large volumes of text. Therefore, the measure of AI typicality is always also a measure of how closely a text matches the style of a typical publication in the respective subject area. A text formulated in this style can therefore be incorrectly classified as "AI-generated text", although it was actually written by a human author (second-order error).

The results of the AI detector are therefore given in PlagAware as AI typicality and not as AI probability or AI classification. The AI typicality represents the degree of an AI-typical formulation and is not proof of the use of an AI.

Metrics for the readability of a text

Text sections written by different authors (which includes the creation of a text using AI) are often revealed by style breaks in the text. For this reason, PlagAware offers further metrics in addition to the AI typicality of the AI detector, which are intended to detect these style breaks in the text and display them in color. In addition to detecting style breaks, these metrics can also be used to identify sections of text that are difficult to understand in order to make them easier to digest by using simpler language.

PlagAware currently offers the metrics described below for evaluating the readability of a text. Further background information on these and other readability metrics can be found in the Wikipedia article on the readability index. The readability index usually includes the number of words per sentence, the relative number of complex multisyllabic words and the number of simple monosyllabic words. However, it only makes indirect statements about more specific text characteristics, such as linguistic fluency or the use of colloquial expressions.

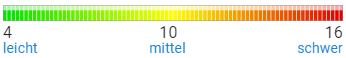

- Wiener Sachtextformel - The Wiener Sachtextformel was developed by Richard Bamberger and Erich Vanecek and is used to assess the readability of German texts. The metric represents the grade level in the German school system for which a text is suitable. For example, level 4 stands for a very easy text, while complicated texts are represented by higher values up to 16.

- Readability according to Gunning Fog - The readability index according to Gunning Fog - developed by Robert Gunning - is an equivalent of the Wiener Sachtextformel for the English language and the US school system. As with the Wiener Sachtextformel, simple texts are represented by the number 4 and difficult texts by the number 16. PlagAware uses a modified version of the Gunning Fog Index, which compensates for the greater length of German words compared to English.

- Readability according to Flesch - The readability index "Flesch Reading Ease" was developed by Rudolf Flesch and transferred to the German language by Toni Amstad. In contrast to the Wiener Sachtextformel and the Fog Index, the Flesch Index has values between 100 (for very easy texts) and 0 (for very difficult texts).
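
The three indices can be sketched from their commonly published formulas. The functions below take precomputed counts instead of raw text, because syllable counting is language-specific. They are assumptions in two respects: the Gunning Fog function shows the standard formula, not PlagAware's modified version, and the Wiener Sachtextformel shown is the first of its several variants, since it is not stated here which variant PlagAware uses. All function and parameter names are hypothetical.

```python
def flesch_reading_ease_german(words, sentences, syllables):
    """Flesch Reading Ease in Toni Amstad's adaptation for German."""
    asl = words / sentences   # average sentence length in words
    asw = syllables / words   # average number of syllables per word
    return 180 - asl - 58.5 * asw


def gunning_fog(words, sentences, complex_words):
    """Standard Gunning Fog index; complex words have three or more syllables."""
    return 0.4 * (words / sentences + 100 * complex_words / words)


def wiener_sachtextformel(words, sentences, words_3plus_syllables,
                          words_over_6_letters, one_syllable_words):
    """First Wiener Sachtextformel (Bamberger and Vanecek)."""
    ms = 100 * words_3plus_syllables / words  # % of words with >= 3 syllables
    sl = words / sentences                    # mean sentence length in words
    iw = 100 * words_over_6_letters / words   # % of words with > 6 letters
    es = 100 * one_syllable_words / words     # % of monosyllabic words
    return 0.1935 * ms + 0.1672 * sl + 0.1297 * iw - 0.0327 * es - 0.875


# Invented counts for a 100-word passage with 10 sentences.
print(flesch_reading_ease_german(words=100, sentences=10, syllables=150))
print(gunning_fog(words=100, sentences=10, complex_words=10))
print(wiener_sachtextformel(100, 10, 20, 30, 50))
```

Note the opposite directions of the scales: a higher Flesch value means an easier text, while higher Gunning Fog and Wiener Sachtextformel values mean a more difficult text.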

The color scale indicates the readability of the respective metric as the background color of the test text. The color green always stands for a more readable text, while the color red indicates a more challenging text (regardless of the selected readability index).

Using the display filter

All the metrics described - the AI typicality and the various readability indices - are calculated for each sentence. As the metrics can vary considerably from sentence to sentence, a meaningful assessment can often only be made for a longer section of text or a certain number of consecutive sentences.

PlagAware therefore offers various display filters that take several consecutive sentences into account and assign an averaged value to the respective sentence. The actual data is not changed, only the display is adjusted. PlagAware offers the following display filters:

- Median - The value determined for the analyzed sentence is compared with the values of the two preceding and the two following sentences. The values of all 5 sentences are sorted in ascending order and the median value is assigned to the analyzed sentence. The median is particularly suitable for eliminating individual outliers and is the default display filter.

- Bilateral filter - The bilateral filter is a digital filter that evens out smaller local deviations but preserves larger jumps ("edges") in the raw data as far as possible. Details on the calculation of the bilateral filter can be found in the Wikipedia article "Bilateral filtering". More than the other filters, the bilateral filter preserves strong deviations from one sentence to the next, while at the same time smoothing out smaller local differences in the evaluation of the sentences.

- Mean value - Like the median filter, the "Mean value" display filter takes into account the sentence being analyzed and its two predecessors and successors. The displayed value of the sentence is averaged from the values of these sentences, whereby neighboring sentences are weighted more heavily than more distant ones. This filter smooths the displayed values most evenly, but also blurs the view of individual sentences to some extent.

- Raw values - No display filter is used; the values determined for the respective sentence are displayed directly.
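
The three smoothing filters can be sketched on a list of per-sentence values. This is a minimal illustration, not PlagAware's implementation: the handling of the first and last sentences (shortened windows), the mean-filter weights `(1, 2, 3, 2, 1)` and the bilateral parameters `sigma_pos` and `sigma_val` are all assumptions.

```python
import math
from statistics import median


def median_filter(values, radius=2):
    """Assign each sentence the median of itself and its neighbours."""
    return [median(values[max(0, i - radius): i + radius + 1])
            for i in range(len(values))]


def weighted_mean_filter(values, weights=(1, 2, 3, 2, 1)):
    """Weighted mean over a 5-sentence window; nearer sentences weigh more."""
    radius = len(weights) // 2
    result = []
    for i in range(len(values)):
        total = weight_sum = 0.0
        for offset, w in zip(range(-radius, radius + 1), weights):
            j = i + offset
            if 0 <= j < len(values):  # skip positions outside the text
                total += w * values[j]
                weight_sum += w
        result.append(total / weight_sum)
    return result


def bilateral_filter(values, radius=2, sigma_pos=1.0, sigma_val=10.0):
    """Smooth small deviations but keep large jumps between sentences."""
    result = []
    for i, center in enumerate(values):
        total = weight_sum = 0.0
        for j in range(max(0, i - radius), min(len(values), i + radius + 1)):
            # Weight falls off with distance in position AND in value.
            w = math.exp(-((j - i) ** 2) / (2 * sigma_pos ** 2)
                         - ((values[j] - center) ** 2) / (2 * sigma_val ** 2))
            total += w * values[j]
            weight_sum += w
        result.append(total / weight_sum)
    return result


outlier = [50, 52, 95, 51, 49, 50, 48]  # one outlier sentence
step = [10, 10, 10, 90, 90, 90]         # abrupt change of style
print(median_filter(outlier))
print(weighted_mean_filter(step))
print(bilateral_filter(step))
```

On the `outlier` data the median removes the single extreme value. On the `step` data the weighted mean blurs the jump, while the bilateral filter leaves it almost untouched.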

You can change the respective display filters according to your personal preferences and the type of text without changing the calculated metrics. The display filter is also taken into account when displaying the bar chart when selecting the metric, so that you immediately get a graphical impression of how the display filter will operate.

Case studies of AI-generated texts

Case study 1: Fully AI-generated text on the topic of "Writing and reading skills"

Prompt used: "Create a text for a seminar paper on the topic: 'Influence of artificial intelligence on the writing and reading skills of high school students'!"

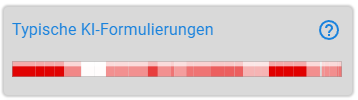

The figure shows the evaluation of the PlagAware AI detector for the text generated by ChatGPT (model GPT-4o mini). The default display filter "Median" was used in the bar chart. It can be seen that almost the entire text clearly contains AI-typical phrasing. Wording of this frequency and consistency gives strong indications that an AI model was used to create the text.

The full interactive report of the plagiarism check and the AI detector for case study 1 can be accessed via the following link: Interactive test report for case study 1 - AI-generated text on writing and reading skills.

Case study 2: Wikipedia source rewritten by AI on the topic of "quantum computers"

Prompt used: "Rewrite the Wikipedia article section as part of a seminar paper on quantum computers!"

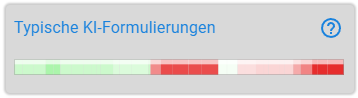

The test text consists of two sections: In the first half of the test text, the Wikipedia article was taken over unchanged. The second part of the test text consists of the text rewritten by ChatGPT. It is evident that the first half was assessed as "AI-untypical", as can be seen from the light green coloring. In the second half of the test text, on the other hand, clear signs of an AI-generated text were detected, which is indicated by the slight to strong red highlighting.

You can access the full interactive report of the plagiarism check and the AI detector for case study 2 at the following link: Interactive check report for case study 2 - Wikipedia source on quantum computing rewritten by AI.

Costs for the AI detector

PlagAware's AI detector is included in the plagiarism check and runs automatically in the background as soon as a plagiarism check is carried out. The plagiarism check including the AI detector costs 1 ScanCredit per 250 words (search in online sources) or 1 ScanCredit per 1000 words (search in library texts only - collusion check). Further information on the pricing structure of PlagAware can be found in the overview of the license models.
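
As a rough aid for estimating costs, the rates above can be turned into a small calculation. Rounding up to whole ScanCredits is an assumption, since the billing granularity is not stated here, and the function name `scan_credits` is hypothetical.

```python
import math


def scan_credits(word_count, online_sources=True):
    """Estimate ScanCredits for one check (rounding up is an assumption)."""
    words_per_credit = 250 if online_sources else 1000
    return math.ceil(word_count / words_per_credit)


# A 5000-word paper: search in online sources vs. library texts only.
print(scan_credits(5000), scan_credits(5000, online_sources=False))
```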

Additions and further setting options for the AI detector are planned for the near future.

Is there an intention to deceive?

When assessing the intention to deceive, it should be noted that an AI may be regarded as a permitted tool for writing your own texts. ChatGPT & Co. thus represent a logical progression of the spell checker, grammar checker or thesaurus that are used in all modern word processing programs. The question of intent to deceive can therefore not necessarily be reduced to the question of AI support when writing a text.

Rather, the focus should be on the question of whether uncited sources have been rewritten by an AI without authorization and passed off as the author's own text. At present, this question can only be answered by a manual assessment (e.g. an oral review).